Bucket policies are one of the key elements of security and compliance when you use AWS S3 buckets to host static content.

Below are code snippets for some of the most commonly used bucket policy documents.

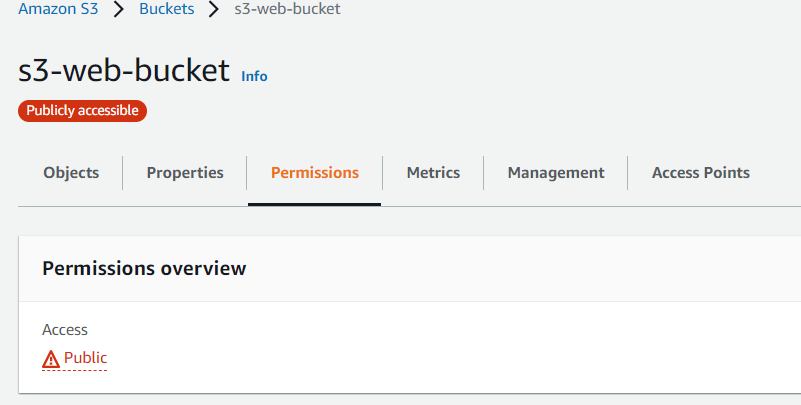

Policy 1 : Enable Public Read access to Bucket objects.

Turning off the Block Public Access setting on the S3 bucket's Permissions tab is not sufficient to enable public read access. You also need to add the bucket policy statement below.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "EnablePublicRead",
          "Effect": "Allow",
          "Principal": "*",
          "Action": ["s3:GetObject"],
          "Resource": ["arn:aws:s3:::ck-public-demo-bucket/*"]
        }
      ]
    }

Policy 2 : Allow only HTTPS connections.

    {
      "Id": "ExamplePolicy",
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "AllowOnlySSLRequests",
          "Action": "s3:GetObject",
          "Effect": "Allow",
          "Resource": [
            "arn:aws:s3:::ck-public-demo-bucket/*"
          ],
          "Condition": {
            "Bool": {
              "aws:SecureTransport": "true"
            }
          },
          "Principal": "*"
        }
      ]
    }

Policy 3 : Allow access only from a specific IP address or a range of IP addresses.

    {
      "Version": "2012-10-17",
      "Id": "AllowOnlyIpS3Policy",
      "Statement": [
        {
          "Sid": "AllowOnlyIp",
          "Effect": "Deny",
          "Principal": "*",
          "Action": "s3:*",
          "Resource": [
            "arn:aws:s3:::ck-public-demo-bucket",
            "arn:aws:s3:::ck-public-demo-bucket/*"
          ],
          "Condition": {
            "NotIpAddress": {"aws:SourceIp": "12.345.67.89/32"}
          }
        }
      ]
    }

The address 12.345.67.89/32 is only a placeholder (345 is not a valid octet). Replace it with your own IP address or CIDR range.
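To make the Deny plus NotIpAddress logic concrete, here is a minimal Python sketch of how that condition behaves. It is an illustration only, not how S3 evaluates policies internally. The function name `is_denied` and the sample addresses (taken from the reserved documentation ranges) are assumptions made for the example.

```python
import ipaddress


def is_denied(source_ip, allowed_cidrs):
    """Mimic a Deny statement guarded by a NotIpAddress condition.

    The Deny applies when the caller's IP is NOT inside any allowed range.
    """
    ip = ipaddress.ip_address(source_ip)
    return not any(ip in ipaddress.ip_network(cidr) for cidr in allowed_cidrs)


# Hypothetical allow-list: one /24 range and one single host (/32).
allowed = ["203.0.113.0/24", "198.51.100.7/32"]

print(is_denied("203.0.113.42", allowed))  # inside the /24, so the Deny does not apply
print(is_denied("198.51.100.8", allowed))  # outside both ranges, so the Deny applies
```

A /32 suffix matches exactly one address, while a shorter prefix such as /24 covers a whole range, which is how the same policy handles both the "specific IP" and the "range" case.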

Policy 4 : Cross Account Bucket access Policy.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": {
            "AWS": "arn:aws:iam::REPLACE-WITH-YOUR-AWS-CROSS-ACCOUNT-NUMBER:root"
          },
          "Action": [
            "s3:GetObject",
            "s3:PutObject",
            "s3:PutObjectAcl"
          ],
          "Resource": [
            "arn:aws:s3:::ck-public-demo-bucket/*"
          ]
        }
      ]
    }

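When the other account uploads an object, it can pass the bucket-owner-full-control canned ACL so that the bucket owner gets full control of the object:

    aws s3 cp demo-file.txt s3://ck-public-demo-bucket/ --acl bucket-owner-full-control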
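The cross-account policy above has one placeholder to fill in. As a rough sketch, the document could be generated in Python instead of edited by hand. The helper name `cross_account_policy` and the account ID `123456789012` are hypothetical and used only for illustration.

```python
import json


def cross_account_policy(bucket, account_id):
    """Return a bucket policy (JSON string) granting another account object access."""
    # AWS account IDs are 12-digit numbers; reject anything else early.
    if not (account_id.isdigit() and len(account_id) == 12):
        raise ValueError("AWS account IDs are 12-digit numbers")
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{account_id}:root"},
                "Action": ["s3:GetObject", "s3:PutObject", "s3:PutObjectAcl"],
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            }
        ],
    }
    return json.dumps(policy, indent=2)


# 123456789012 is a placeholder account ID, not a real account.
print(cross_account_policy("ck-public-demo-bucket", "123456789012"))
```

Building the document as a dictionary and serializing it with `json.dumps` avoids the missing-comma and unbalanced-brace mistakes that are easy to make when editing policy JSON manually.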